With last week’s release of GPT‑5, OpenAI has delivered what it says is its best model yet for coding. And now that GPT‑5 is available in Beekeeper Studio’s AI Shell, it’s a good time to look at what it could mean for your SQL workflows.

Let’s start with a recap of what OpenAI highlights in its “Introducing GPT‑5 for developers” announcement:

- More reliable code generation: GPT‑5 follows prompts and formatting rules more consistently, making SQL, config, and script output cleaner and more predictable.

- Lower latency when needed: A new reasoning_effort API setting lets you trade depth for speed.

- Handles complex, longer tasks more effectively: With a 256k-token context window and improved long-range reasoning, OpenAI reports that GPT‑5 performs better across multi-step prompts and extended sessions.

- Greater control over output: New API options like verbosity help tailor responses to your needs, from terse code to detailed explanation.

- Fewer hallucinations: OpenAI reports a ~80% reduction in factual errors compared to GPT‑4 on internal benchmarks, suggesting more dependable responses for technical tasks.

In practice, when working with SQL, that could mean GPT-5 keeps more of your schema and query history in context, leading to more predictably useful queries and analysis. Here’s what that might look like in Beekeeper Studio’s AI Shell.

Larger context window

When it comes to retaining more of what you’re working on, such as schema, queries, or a multi-step discussion, context window size matters. GPT‑5 gives you 256k tokens, a decent upgrade over GPT‑4o’s 128k, but that’s only about a quarter of the 1-million-token windows offered by Claude Sonnet 4 and Gemini 2.5 Pro.

So let’s try to put it into concrete terms.

A 256k-token window is enough for GPT-5 to keep a full database schema, a reasonable history of queries and results, and reference notes in memory during a session. That’s useful if you’re iterating on a problem without wanting to restate table structures or past steps.
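To make that concrete, here is a rough budget check in Python. It is a sketch, not how AI Shell counts tokens: it assumes about four characters per token (a common rule of thumb; a real tokenizer will give different counts), uses the 256k figure quoted above, and the schema, history, and note strings are made up for illustration.

```python
# Rough context-budget check (a sketch, not AI Shell's token accounting).
# Assumption: ~4 characters per token, a common rule of thumb; a real
# tokenizer will produce different counts, especially for SQL and numbers.

CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 256_000  # GPT-5 figure quoted in this article


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(parts, limit=CONTEXT_LIMIT, reserve_for_reply=8_000):
    """Sum estimated tokens for every part, keeping an (arbitrary) allowance for the reply."""
    used = sum(estimate_tokens(p) for p in parts)
    return used, used + reserve_for_reply <= limit


# Hypothetical session: 300 small tables, 50 earlier queries, one note.
schema = "CREATE TABLE t (id INTEGER PRIMARY KEY, name TEXT, created_at TEXT);\n" * 300
history = "SELECT name, COUNT(*) FROM t GROUP BY name;  -- 42 rows\n" * 50
notes = "Revenue is stored in cents; divide by 100 for dollars.\n"

used, ok = fits_in_context([schema, history, notes])
print(f"~{used:,} estimated tokens; fits with room for a reply: {ok}")
```

With a few hundred small tables and a modest history, the estimate stays well under the limit; very wide schemas or pasted result sets are where the budget starts to matter.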

By contrast, 1-million-token models like Claude Sonnet 4 or Gemini 2.5 Pro can hold substantially more supporting material at once, such as broader documentation sets or multiple interconnected schemas, though the trade-off may be cost and speed.

That makes GPT-5’s larger context window meaningfully more useful than GPT-4o’s, but if context window size is your priority, you might need to reach for Claude or Gemini.

There is, though, a caveat. A larger context window doesn’t just mean more room for schema and history; it also means more tokens processed with each request, which can burn through your credits quickly.

Per-token costs are also higher with some of the newer models. GPT-5 itself is cheaper than GPT-4o (about $1.25 per million input tokens and $10 per million output tokens), but if you step up to 1-million-token models like Claude Sonnet 4 or Gemini 2.5 Pro, you’re looking at roughly 2–5× higher costs.
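The cost caveat compounds over a session, because each turn resends the growing history. Here is a minimal Python sketch of that arithmetic, using the GPT‑5 prices quoted above and assuming the whole context is resent every turn with no prompt-caching discount; the session sizes are hypothetical.

```python
# Back-of-the-envelope session cost using the GPT-5 list prices quoted above.
# Assumptions: every turn resends the full context so far, and no
# prompt-caching discount applies. Token counts below are hypothetical.

INPUT_USD_PER_MILLION = 1.25
OUTPUT_USD_PER_MILLION = 10.00


def session_cost(base_context, turns, prompt_tokens, reply_tokens):
    """Return total USD for a session whose history grows with each exchange."""
    context = base_context       # schema, notes, etc. sent with every request
    total_in = total_out = 0
    for _ in range(turns):
        context += prompt_tokens     # the new question joins the context
        total_in += context          # the whole context is billed as input
        total_out += reply_tokens    # the model's answer is billed as output
        context += reply_tokens      # the answer becomes part of the history
    return (total_in * INPUT_USD_PER_MILLION + total_out * OUTPUT_USD_PER_MILLION) / 1_000_000


# 50k tokens of schema, 20 questions of ~200 tokens, ~500-token replies.
print(f"${session_cost(50_000, 20, 200, 500):.2f}")  # about $1.52
```

Most of that comes from input: the 20 turns resend roughly 1.1 million tokens of context, while the replies total only 10,000 tokens.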

Improved accuracy

OpenAI reports that GPT-5 produces roughly 80% fewer factual errors than GPT-4 in its internal coding benchmarks. In AI Shell, we already minimize the risk of mistakes by sharing your schema and query history with the LLM, but even so, complex queries can still trip up a model.

If GPT-5’s improvements hold true for SQL, you should see fewer of those edge-case missteps, such as misapplied functions or incorrect joins.
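Whatever the model, some of those missteps are cheap to catch before a query runs. The sketch below is not AI Shell’s implementation; it uses Python’s built-in sqlite3 module and a made-up two-table schema to compile a generated query with EXPLAIN, which surfaces unknown tables or columns and syntax errors without running the query itself. It cannot tell you whether a join is logically correct: a query that joins on the wrong key will still compile.

```python
import sqlite3
from typing import Optional


def check_query(conn: sqlite3.Connection, sql: str) -> Optional[str]:
    """Compile sql with EXPLAIN; return None if it compiles, else SQLite's error message."""
    try:
        conn.execute("EXPLAIN " + sql)  # prepares the statement; the query itself is not run
        return None
    except sqlite3.Error as exc:
        return str(exc)


# Made-up schema standing in for the database the model has been told about.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
""")

good = ("SELECT c.name, SUM(o.total) FROM customers c "
        "JOIN orders o ON o.customer_id = c.id GROUP BY c.name")
bad = good.replace("o.total", "o.amount")  # a column the schema doesn't have

print(check_query(conn, good))  # None
print(check_query(conn, bad))   # reports the missing column o.amount
```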

More consistent instruction following

OpenAI says GPT-5 is better at following instructions and sticking to requested structures. While AI Shell already applies its own guardrails to ensure output is well-formed, this could further reduce the need for post-processing or retries in cases where the request is more complex or unusual.
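As an illustration of the kind of post-processing that stricter instruction following could reduce, here is a hypothetical Python check. It assumes the prompt asked for exactly one SQL code block and treats any reply that doesn’t comply as a signal to retry; the function name and response format are assumptions for this sketch, not part of AI Shell.

```python
import re
from typing import Optional

# Three backticks, built at runtime so this example can live inside a Markdown code block.
FENCE = "`" * 3
SQL_BLOCK = re.compile(re.escape(FENCE) + r"sql[ \t]*\n(.*?)" + re.escape(FENCE),
                       re.DOTALL | re.IGNORECASE)


def extract_single_sql(reply: str) -> Optional[str]:
    """Return the SQL if the reply holds exactly one non-empty sql block; None means 'retry'."""
    blocks = SQL_BLOCK.findall(reply)
    if len(blocks) != 1 or not blocks[0].strip():
        return None
    return blocks[0].strip()


ok_reply = "Here is the query:\n" + FENCE + "sql\nSELECT COUNT(*) FROM orders;\n" + FENCE
chatty_reply = "You could count the rows in orders with COUNT(*)."

print(extract_single_sql(ok_reply))      # SELECT COUNT(*) FROM orders;
print(extract_single_sql(chatty_reply))  # None
```

The better a model sticks to the requested structure, the less often a check like this sends you round the retry loop.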

Speed vs. depth (reasoning_effort)

GPT-5 introduces a reasoning_effort parameter that allows developers to trade processing time for more detailed reasoning. AI Shell doesn’t yet expose this control, but it could become relevant if there’s demand for tuning between faster responses and more thorough step-by-step reasoning.
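For developers calling the API directly, here is a sketch of a Chat Completions request body that maps two hypothetical presets onto GPT‑5’s controls. It assumes the reasoning_effort values documented for GPT‑5 (minimal, low, medium, high) and the verbosity parameter mentioned earlier (low, medium, high); the build_request helper, preset names, and system prompt are made up for illustration, and nothing is sent over the network.

```python
import json

# Hypothetical presets mapping a UI choice to GPT-5's speed/depth controls.
PRESETS = {
    "fast": {"reasoning_effort": "minimal", "verbosity": "low"},
    "thorough": {"reasoning_effort": "high", "verbosity": "medium"},
}


def build_request(question: str, schema_ddl: str, preset: str = "fast") -> dict:
    """Build (but do not send) a Chat Completions request body for GPT-5."""
    return {
        "model": "gpt-5",
        **PRESETS[preset],
        "messages": [
            {"role": "system",
             "content": "Reply with one SQLite query for this schema:\n" + schema_ddl},
            {"role": "user", "content": question},
        ],
    }


body = build_request("How many orders did each customer place?",
                     "CREATE TABLE orders (id INTEGER, customer_id INTEGER);",
                     preset="thorough")
print(json.dumps(body, indent=2))
```

Sending it would be a POST to OpenAI’s /v1/chat/completions endpoint with your API key. The Responses API nests the same controls differently (reasoning.effort and text.verbosity), so check the reference for whichever endpoint you use.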

What GPT-5 adds up to for SQL

Taken together, GPT-5’s improvements suggest it should act as a more capable assistant for day-to-day SQL work.

The larger context window means it can keep more of your schema, query history, and notes in view, reducing the need to repeat yourself.

Better accuracy could mean fewer small but costly mistakes in complex joins or function calls.

Improved instruction following should make it easier to get exactly the output you want, even for edge-case requests.

If you want to see GPT-5, Claude, Gemini, local Ollama models, or any OpenAI-compatible API model in action, you can connect them to Beekeeper Studio’s AI Shell today.

Get started with Beekeeper Studio’s AI Shell

If you have a paid license, getting started is easy—just head to the AI Shell docs.

New to Beekeeper Studio? Download it today to start your free trial.

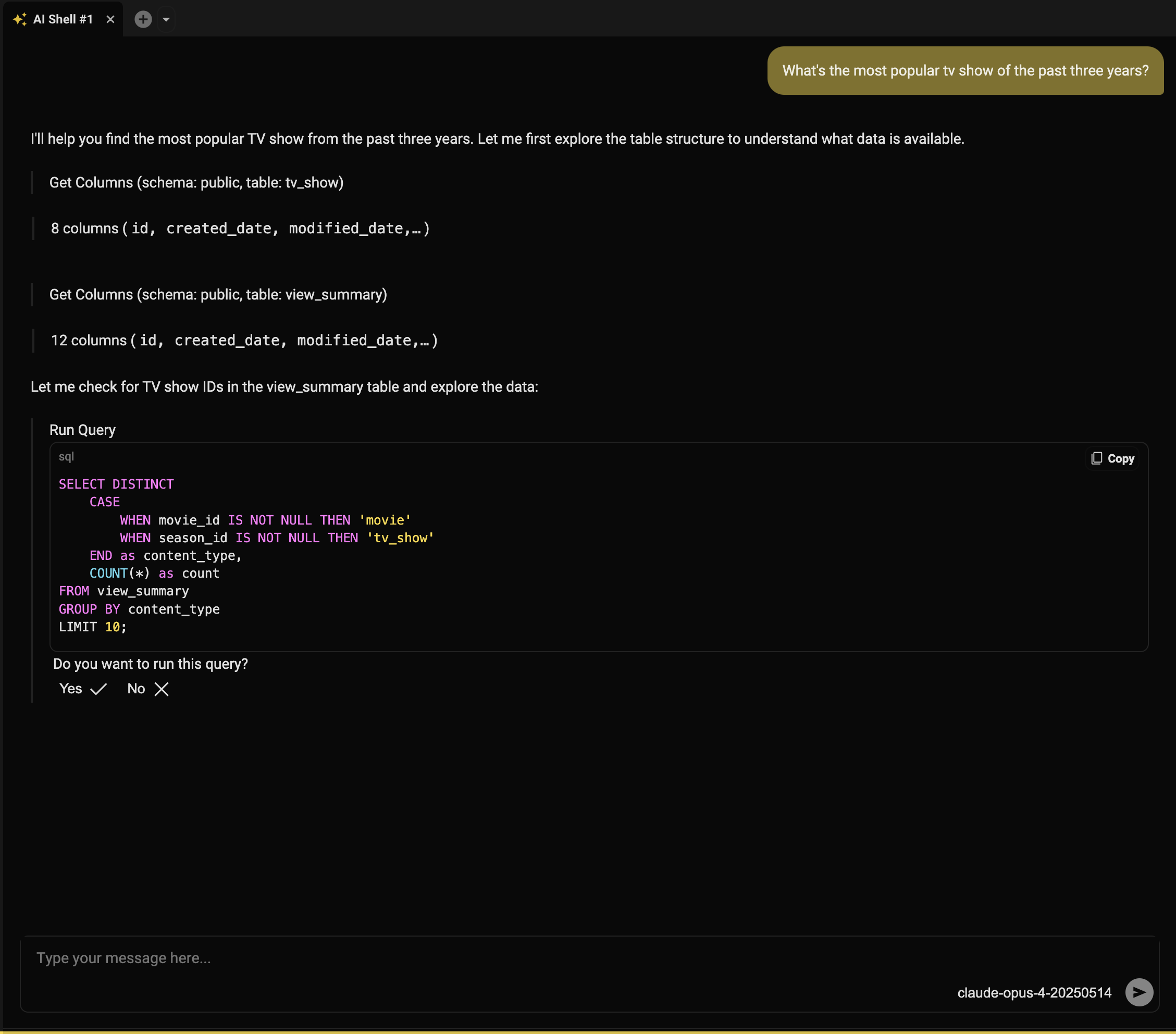

Update to the latest version and connect to your database as usual. Where you’d normally open a new query tab, you’ll now see a dropdown to choose either a standard query tab or an AI Shell.

Open an AI Shell and ask a question as you would with any LLM interface. Beekeeper Studio works with your model to generate a query that answers it. If it’s not quite right, you can refine the prompt, edit the SQL, or ask follow-ups. You’re always in control—nothing runs until you approve it.

Get started with Beekeeper Studio today

Beekeeper Studio is fast, open source, and cross-platform, with support for Postgres, MySQL, SQLite, MongoDB, and more. And now, with a schema-aware AI pair programmer built in.

The best way to see if it fits your workflow? Try it for yourself.