Your favorite LLM is great at writing SQL, but it knows nothing about your data. That’s the gap Beekeeper Studio’s AI Shell closes. It’s a privacy-first, conversational interface that acts as your AI SQL pair programmer, helping you generate SQL queries tailored to your actual schema.

tl;dr: What you can do with Beekeeper Studio’s AI Shell

- Chat with your database: Ask questions about your data in plain English and get well-crafted SQL queries back.

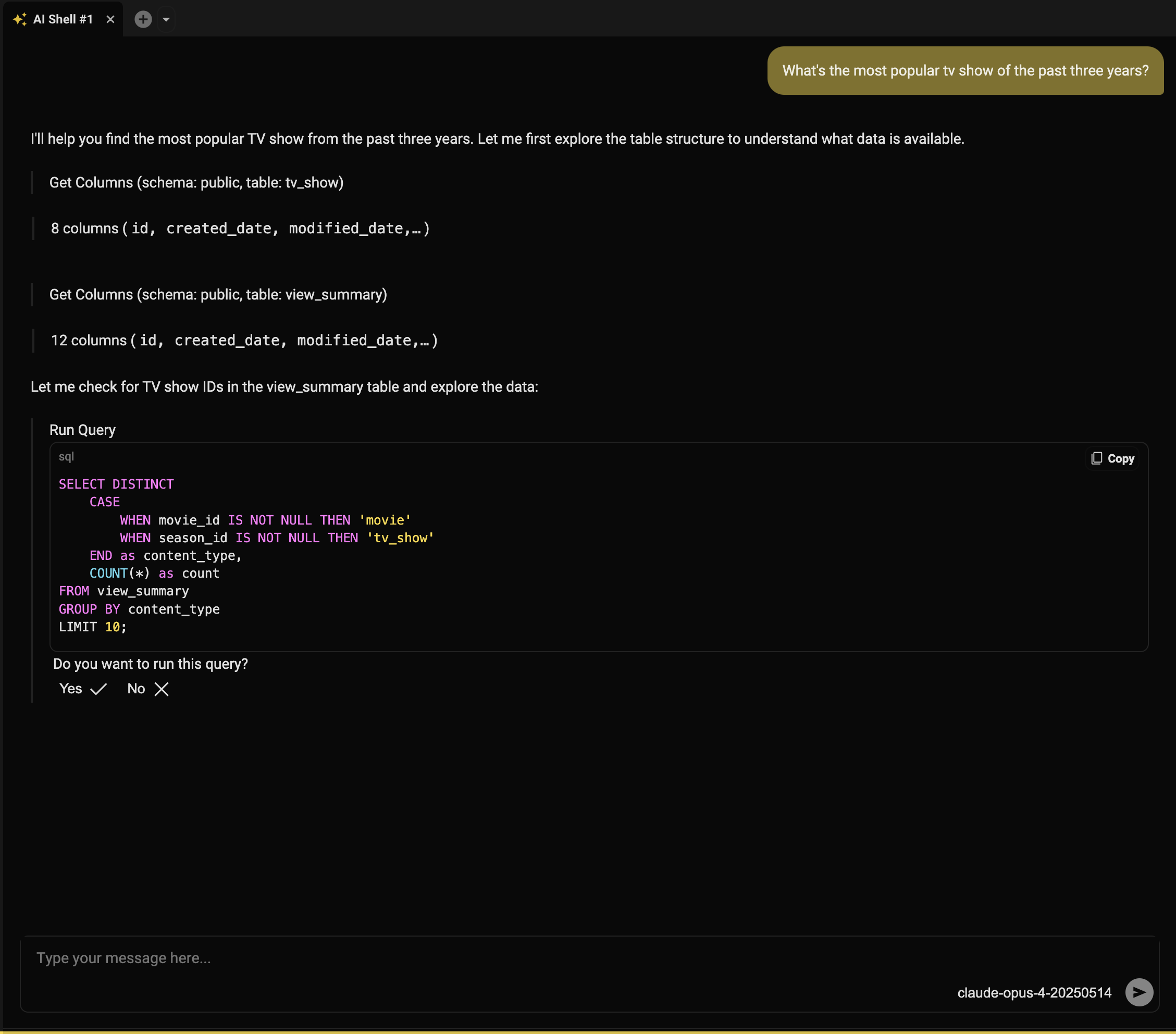

- Dive straight in: With your permission, the AI Shell runs exploratory queries to learn how your tables are structured and related. It uses that knowledge to generate more accurate, context-aware SQL without you needing to explain anything.

- Connect directly to your chosen LLM: Works with any model that supports the OpenAI API, including Claude, OpenAI’s models, Gemini, and local models. You bring your own key; Beekeeper Studio never proxies your requests or charges extra.

- Keep control: The AI Shell suggests queries, but you decide what gets executed.

- Use it today: Available now in all paid plans, with no extra cost beyond your LLM’s standard API charges.

What Beekeeper Studio’s AI Shell does

Agentic coding tools like Claude Code and Cursor can already reason across an entire codebase. Beekeeper Studio’s AI Shell brings the same kind of context to your database. Rather than making you paste table structures or sample data into ChatGPT, the AI Shell shares your database schema with your chosen LLM so that it understands your actual structure: tables, columns, and relationships.
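To make “shares your database schema” more concrete, here is a minimal Python sketch of the kind of schema summary a tool could hand to an LLM. It is not Beekeeper Studio’s implementation. It assumes a SQLite database and uses the standard `sqlite3` module with SQLite’s `PRAGMA table_info` and `PRAGMA foreign_key_list`; the function name `describe_schema` and the `plans` and `customers` tables are hypothetical. Note that it reads only table definitions, never rows.

```python
import sqlite3


def describe_schema(conn: sqlite3.Connection) -> str:
    """Hypothetical helper: summarize tables, columns, and foreign keys as plain text."""
    lines = []
    # List user tables, skipping SQLite's internal sqlite_* tables.
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' "
        "AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        # Each row: (cid, name, type, notnull, dflt_value, pk)
        cols = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        col_desc = ", ".join(
            f"{name} {ctype}" + (" PK" if pk else "")
            for _, name, ctype, _, _, pk in cols
        )
        lines.append(f"{table}({col_desc})")
        # Each row: (id, seq, table, from, to, on_update, on_delete, match)
        for fk in conn.execute(f'PRAGMA foreign_key_list("{table}")').fetchall():
            ref_table, from_col, to_col = fk[2], fk[3], fk[4]
            lines.append(f"  {table}.{from_col} -> {ref_table}.{to_col}")
    return "\n".join(lines)


# Usage with a hypothetical in-memory schema (no rows are ever read).
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE plans (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE customers (
        id INTEGER PRIMARY KEY,
        plan_id INTEGER REFERENCES plans(id),
        churned_at TEXT
    );
    """
)
print(describe_schema(conn))
```

Other databases expose the same information through their own catalogs (for example, `information_schema` in Postgres and MySQL), so a real tool would need engine-specific introspection queries.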

That means you can ask things like:

“How many customers churned last quarter, grouped by plan type?”

and get a runnable SQL query based on the actual shape of your data. Whether it’s a new dataset or your primary project, you can get productive faster without stopping to look up joins, column names, or table structures.

Secure, private, and with you in control

Nobody wants a black box executing SQL on their data.

That’s why Beekeeper Studio’s AI Shell never lets the LLM interact directly with your data. You review every SQL statement it generates and decide whether to run it.

And because you connect directly to your own LLM, Beekeeper Studio doesn’t proxy or track your queries, answers, or any of your data. You can confirm that in the source code.

Get started with Beekeeper Studio’s AI Shell

If you have a paid license, getting started is easy: head into the AI Shell docs.

New to Beekeeper Studio? Download it today to start your free trial.

Update to the latest version and connect to your database as normal. Now, where you’d usually open a new query tab, you’ll see a dropdown menu where you can open either a standard query tab or an AI Shell.

Open an AI Shell and ask a question, just as you would in any other LLM-powered chat interface. Beekeeper Studio works with your LLM to generate a first query aimed at answering your question. If the query isn’t quite right, you can tweak the prompt, edit the SQL directly, or ask follow-up questions. You stay in control, and nothing runs unless you say so.

Get started with Beekeeper Studio today

Beekeeper Studio is fast, open source, and cross-platform, with support for Postgres, MySQL, SQLite, MongoDB, and more. And now, with a schema-aware AI pair programmer built in.

The best way to see if it fits your workflow? Try it for yourself.